
The Avoidable Downsides of Sample-Based Migration Testing

Sample-based testing has been a staple of quality organizations in the life sciences for decades. In some cases it makes perfect sense, and, absent other options, it can be made to work.

The viability of sample-based testing rests on its assumptions. If the errors you are looking for are equally likely to occur anywhere across the items you are testing, sampling is on the right track. Consider gradually degrading equipment at a pharmaceutical manufacturing plant: it’s easy to select a sample of products to test the performance of the production machines. You can see the quality of the tablets degrading, isolate the issue, fix the problem and resume production.

However, this assumption describes data managed in an information system poorly, and anyone who has migrated data or content using sample-based testing intuitively understands this shortcoming. You could rerun a sample-based migration test five times, using different data or documents each time, and come up with five different results. That alone shows the test itself has shortcomings.

Because sample-based testing of data migrations is unpredictable, it can be unsuitable for GxP system migrations and can easily delay the timeline and increase the cost of a migration project. When a company tests by sampling, it takes a small, representative subset of its data and documents across different document types and manually tests them for gaps or anomalies. As noted above, depending on which data or documents are picked, each round can, and probably will, produce different results, making predictability almost impossible.
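Why does the choice of sample matter so much? A simplified model helps, using assumed numbers rather than data from our projects: when defects are concentrated in a small number of documents instead of being spread evenly, the chance that a random sample contains none of them follows the hypergeometric distribution. The sketch below computes that probability; the function `prob_sample_misses_all` and the document counts are hypothetical.

```python
from math import comb


def prob_sample_misses_all(total: int, defective: int, sample: int) -> float:
    """Probability that a random sample drawn without replacement contains
    none of the defective records (hypergeometric distribution):
    C(total - defective, sample) / C(total, sample)."""
    if sample > total - defective:
        return 0.0  # too few clean records to fill the sample
    return comb(total - defective, sample) / comb(total, sample)


# Hypothetical migration: 100,000 documents, of which 50 share one defect
# (say, an empty field that is mandatory in the new system); 200 are sampled.
p = prob_sample_misses_all(total=100_000, defective=50, sample=200)
print(f"Chance the sample contains none of the defective documents: {p:.1%}")
```

Under these assumed numbers, a 200-document sample misses the defect roughly nine times out of ten, while a different sample might happen to catch it. That is the run-to-run variability described above.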

Examples of what makes testing difficult include:

- The legacy system had no data in a field that’s required in the new system.

- One field in the legacy system might become two or more fields in a new system.

- Multiple users entered data inconsistently in the same legacy field, and that data needs to be unified and made consistent in the new system.

- Field sizes differ between the old and new systems.
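Each of the situations listed above can be expressed as a rule and checked mechanically on every record instead of on a sample. The sketch below is a minimal illustration, assuming a hypothetical legacy record layout (`doc_number`, `title`, `author` stored as "Last, First", `status`) and hypothetical target rules (`REQUIRED_FIELDS`, `MAX_LENGTHS`, `STATUS_MAP`); a real project would take these from its migration specification.

```python
from dataclasses import dataclass

# Hypothetical target-system rules; real values come from the migration spec.
REQUIRED_FIELDS = ["doc_number", "title", "author"]
MAX_LENGTHS = {"title": 100}
# Hypothetical mapping that unifies inconsistent legacy status entries.
STATUS_MAP = {"approved": "Approved", "appr.": "Approved", "APPROVED": "Approved",
              "draft": "Draft", "DRAFT": "Draft"}


@dataclass
class Issue:
    doc_id: str
    field: str
    problem: str


def check_record(doc_id: str, legacy: dict) -> list[Issue]:
    issues = []
    # Required in the new system, but empty in the legacy record.
    for field in REQUIRED_FIELDS:
        if not (legacy.get(field) or "").strip():
            issues.append(Issue(doc_id, field, "missing required value"))
    # One legacy field ("Last, First") becomes two target fields.
    author = (legacy.get("author") or "").strip()
    if author:
        parts = [part.strip() for part in author.split(",")]
        if len(parts) != 2 or not all(parts):
            issues.append(Issue(doc_id, "author", "cannot split into last/first name"))
    # Inconsistent legacy entries must map to one target value.
    if legacy.get("status") not in STATUS_MAP:
        issues.append(Issue(doc_id, "status", "no mapping for legacy value"))
    # Target field is shorter than the legacy value.
    for field, limit in MAX_LENGTHS.items():
        if len(legacy.get(field) or "") > limit:
            issues.append(Issue(doc_id, field, f"exceeds target length {limit}"))
    return issues


def check_all(records: dict[str, dict]) -> list[Issue]:
    """Apply every rule to every record, not to a sample."""
    return [issue for doc_id, legacy in records.items()
            for issue in check_record(doc_id, legacy)]


records = {
    "D1": {"doc_number": "SOP-001", "title": "Cleaning", "author": "Smith, Ann", "status": "appr."},
    "D2": {"doc_number": "", "title": "X" * 120, "author": "Jones", "status": "Obsolete?"},
}
for issue in check_all(records):
    print(issue)
```

Here the clean record `D1` produces no issues, while `D2` trips all four rules at once, and every record in the set is examined on every run.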

If and when the differences are discovered, IT needs to work with the business to agree on next steps to remedy the gaps or anomalies. Even after those issues have been resolved and a solution has been found, the team must sample again to confirm that things are now error-free. It’s extremely common for even more issues to come to light during this second sample, and they are most likely different issues. The entire cycle of working with the business and creating a solution must then be repeated until some level of comfort is reached, or until the time for testing simply runs out.

How many times will IT have to repeat this process? The answer is uncertain: it depends on the results of each prior sample-based test as well as on the quantity and overall quality of the data. Most teams plan on sampling twice, but our experience shows it takes five to nine rounds. Because the number of rounds varies, this unknown factor raises concerns among all parties and throws the project timeline and budget out the window. It also increases the chance of errors during Validation, and it’s very likely that the Production run will include unknown errors, which is at the very least embarrassing and could raise compliance or quality concerns as well.
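The sample, fix and resample loop described above can be sketched as a small simulation. It is a simplified model with hypothetical numbers, not a description of any real project: each issue type affects a fixed fraction of documents independently, a sample of `sample_size` documents reveals an issue type if at least one sampled document has it, discovered issues are fixed before the next round, and the team stops at the first sample that reveals nothing new or when `max_rounds` runs out. The function name `sampling_rounds` and the issue prevalences are assumptions.

```python
import random


def sampling_rounds(prevalence: dict[str, float], sample_size: int,
                    max_rounds: int, seed: int = 0):
    """Simulate sample -> fix -> resample until a sample reveals nothing new
    or the testing window (max_rounds) runs out."""
    rng = random.Random(seed)
    undiscovered = dict(prevalence)  # issue type -> fraction of documents affected
    history = []
    for _ in range(max_rounds):
        # An issue type shows up if at least one sampled document has it,
        # assuming documents are affected independently of each other.
        found = [name for name, p in undiscovered.items()
                 if rng.random() < 1 - (1 - p) ** sample_size]
        history.append(found)
        if not found:
            break  # a clean-looking sample: testing stops here
        for name in found:
            del undiscovered[name]  # fixed before the next sample is drawn
    return history, sorted(undiscovered)


# Hypothetical issue types and prevalences, 100 documents per sample.
prevalence = {"empty required field": 0.05, "value too long": 0.01,
              "unsplittable name": 0.002, "unmapped status": 0.0005}
history, remaining = sampling_rounds(prevalence, sample_size=100, max_rounds=9)
for round_no, found in enumerate(history, start=1):
    print(f"Round {round_no}: found {found}")
print(f"Still undetected when testing stopped: {remaining}")
```

In this model, common issue types tend to surface in early rounds, rarer ones only in later rounds, and the rarest may never surface before testing stops; those are the unknown errors that can later appear in Validation or Production.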


The Alternative: Automated Testing For 100% Migration Verification

It’s not enough to plan a project timeline based on the number of documents. IT must also consider the legacy and new systems, as well as the quality of the data. Once the layers of the onion are peeled back, the project often looks more challenging.

Consider when the data was last migrated, say ten years ago. That data may itself have been migrated from even older systems. With decades-old data coming from decades-old systems, sampling is unlikely to complete successful testing in the allotted time.

Automated testing, by contrast, delivers predictability by testing ALL data and finding issues earlier in the process. Solutions can then be implemented sooner and more predictably, giving the team the confidence to move on and complete the migration project on time and within budget.
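Testing ALL data means reconciling every migrated record against its source rather than inspecting a sample. The sketch below is one minimal way to do that, assuming both systems can be exported to Python dictionaries keyed by a shared document ID; the function `reconcile`, the field names, `field_map` and `transforms` are hypothetical stand-ins for a project’s own mapping specification.

```python
from typing import Callable, Optional


def reconcile(source: dict[str, dict], target: dict[str, dict],
              field_map: dict[str, str],
              transforms: Optional[dict[str, Callable]] = None):
    """Compare every source record with its migrated counterpart.

    source/target: records keyed by a shared document ID (assumed).
    field_map: source field name -> target field name (hypothetical).
    transforms: optional source field -> function giving the expected
    target value, for fields the migration is meant to change.
    """
    transforms = transforms or {}
    missing = sorted(source.keys() - target.keys())     # never migrated
    unexpected = sorted(target.keys() - source.keys())  # no source record
    mismatches = []
    for doc_id in sorted(source.keys() & target.keys()):
        for src_field, tgt_field in field_map.items():
            expected = source[doc_id].get(src_field)
            if src_field in transforms:
                expected = transforms[src_field](expected)
            actual = target[doc_id].get(tgt_field)
            if expected != actual:
                mismatches.append((doc_id, src_field, expected, actual))
    return missing, unexpected, mismatches


source = {"D1": {"title": "Cleaning SOP", "status": "appr."},
          "D2": {"title": "Batch Record", "status": "draft"},
          "D3": {"title": "Deviation", "status": "draft"}}
target = {"D1": {"name": "Cleaning SOP", "state": "Approved"},
          "D2": {"name": "Batch Record", "state": "Approved"},
          "D4": {"name": "Orphan", "state": "Draft"}}
status_map = {"appr.": "Approved", "draft": "Draft"}
missing, unexpected, mismatches = reconcile(
    source, target, field_map={"title": "name", "status": "state"},
    transforms={"status": status_map.get})
print("Missing in target:", missing)
print("Unexpected in target:", unexpected)
print("Field mismatches:", mismatches)
```

Because every record is compared, running the check twice on the same data gives the same answer, which is exactly the predictability that drawing two different samples cannot offer.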

Why Is 100% Migration Testing a Better Choice?

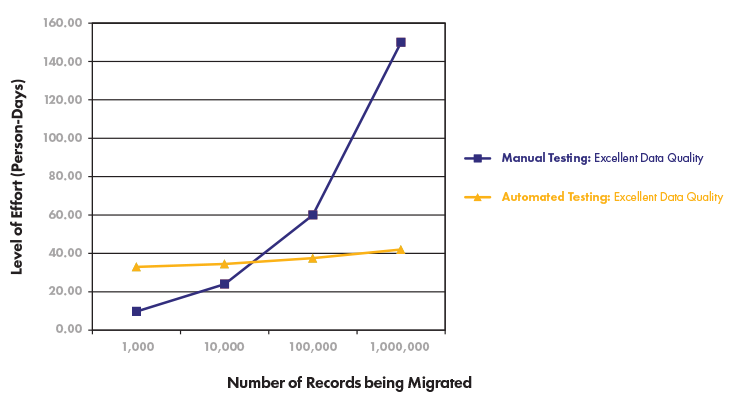

If you want predictable results, automated data migration testing has proven to be the better approach. In our experience, when migrating a small amount of data (fewer than roughly 10,000 to 15,000 documents), sampling may well take about the same time to complete as automated testing. This means that at similar or greater volumes, sampling costs you as much as automated testing without delivering its benefit. Very often, however, migrations include hundreds of thousands or even millions of documents. Moreover, our study assumes high-quality data, which is usually not the case.

If you could test 100% of your data to ensure it would all migrate successfully, would you? Of course! If manual sample-based testing already costs as much as automated testing, why not do 100% automated testing from the start? One reason may be that teams never expect to need the five to nine iterations that are typical of GxP data.

Finding and overcoming problems manually can slow down the project as more and more roadblocks are uncovered. Automating this process protects the project deadline and helps avoid having to give management bad news about delays or cost overruns.

Once again, this method is much more predictable and “makes sense” more often than one might think. Automated migration and testing processes, supported by the right methodology, can save time and improve efficiency and quality while reducing risk across a broad set of migration challenges.

Access the free whitepaper to read more about automated data migration and 100% migration testing.

Download: When Do Automated Data Migration and 100% Testing Make Sense?